In this post I will work on the CIFAR-10 dataset, collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton. The images can be downloaded here. Also, find my python notebook(.ipynb) here.

Dataset

First, let’s talk a bit about how our dataset looks like. From the downloading source, we are provided with five training batches and one test batch, each with 10000 randomly-selected images from each class.

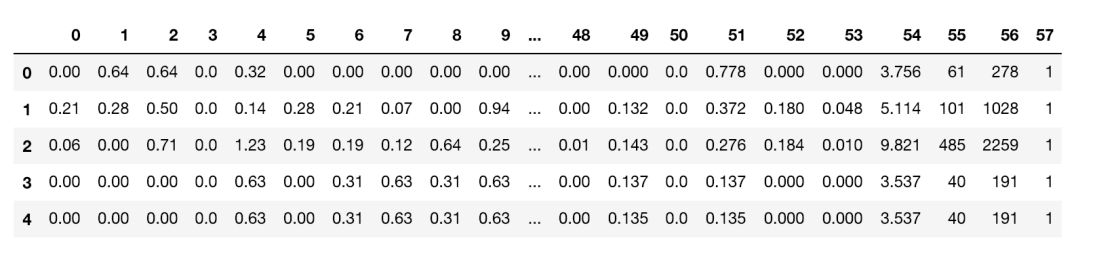

A quick glimpse over the dataset gives us this:

Transformations

Tensorflow might not be the most Deep Learning framework to work with. For this reason, in this blog post I will present a solution using TensorFlow eager execution. For more details, read here.

So, Transformations. Why do we need them? Deep learning models are known to be especially effective and produce very good results. Though, one downside is that they are usually very data hungry. Therefore, given that we have too little data, we apply data transformation over them, and use them in training.

Now, let’s get our hands dirty. “Talk is cheap, show me some code!” (Linus Torvalds)

First, we provide ourselves with all the imports needed:

import tensorflow as tf import pickle import pandas as pd from sklearn.model_selection import train_test_split from keras.preprocessing.image import ImageDataGenerator from keras.datasets import cifar10 import numpy as np import math import matplotlib.pyplot as plt

For ease of work we enable eager execution.

tf.enable_eager_execution()

Now, we use the [1] implementation to load the dataset.

X_data1, y_data1 = load_cfar10_batch("cifar-10-batches-py", 1) # load the dataset

X_data2, y_data2 = load_cfar10_batch("cifar-10-batches-py", 2) # load the dataset

X_data3, y_data3 = load_cfar10_batch("cifar-10-batches-py", 3) # load the dataset

X_data4, y_data4 = load_cfar10_batch("cifar-10-batches-py", 4) # load the dataset

X_data5, y_data5 = load_cfar10_batch("cifar-10-batches-py", 5) # load the dataset

X_data = np.concatenate((X_data1, X_data2, X_data3, X_data4, X_data5), axis=0)

y_data = np.concatenate((y_data1, y_data2, y_data3, y_data4, y_data5), axis=0)

y_data = tf.Variable(pd.get_dummies(y_data).values) # hot-encode categorical

Next, we create a function to nicely display the original image and its transformations.

def show_image(original_image,translated, cropped_resized, transformed, title="Data transformations"):

fig=plt.figure()

fig.suptitle(title)

original_plt=fig.add_subplot(1,4,1)

original_plt.set_title('original')

original_plt.imshow(original_image)

transformed_plt=fig.add_subplot(1,4,2)

transformed_plt.set_title('transformed')

transformed_plt.imshow(transformed)

cropped_resized_plt=fig.add_subplot(1,4,3)

cropped_resized_plt.set_title('rescaled')

cropped_resized_plt.imshow(cropped_resized)

translated_plt=fig.add_subplot(1,4,4)

translated_plt.set_title('translated')

translated_plt.imshow(translated)

plt.show(block=True)

Data Transform

We now apply the three transformations and merge everything in a bigger data array. We also take into account the size of the labels dataset.

data = X_data label = y_data translated = tf.cast(tf.contrib.image.translate(data, translations=[5, 5]), dtype=tf.uint8) cropped_resized = tf.cast(tf.image.crop_and_resize(data, boxes=[[0.0, 0.0, 0.89, 0.89]]*X_data.shape[0], crop_size = [32, 32], box_ind=np.arange(X_data.shape[0])), dtype=tf.uint8) transformed = tf.cast(tf.contrib.image.transform(data, [1, tf.sin(-0.2), 0, 0, tf.cos(-0.2), 0, 0, 0]), dtype=tf.uint8) merged = tf.concat([data, translated, cropped_resized, transformed], axis=0) labels = tf.concat([label, label, label, label], axis=0)

Visually, a transformed images look as follows:

show_image(data[42], translated[42], cropped_resized[42], transformed[42])

Data Split into train and test

We use train_test_split from sklearn over the transformed dataset. We return training and testing samples and then we pickle them for future use.

X_train, X_test, y_train, y_test = train_test_split(np.array(merged), np.array(labels), test_size=0.33, random_state=42)

And, because we want to use that in future, let us save transformed data in a pickle file.

output = open('data.pkl', 'wb')

pickle.dump(((X_train, y_train), (X_test, y_test)), output)

Was quite a long way till now. But we did it! Hooray! 😁 Although it is not a complicated stuff, it took me quite a while to play with it. For this reason, I decided to share it with the world. Also, find the python notebook(.ipynb) here.

References

1. https://towardsdatascience.com/cifar-10-image-classification-in-tensorflow-5b501f7dc77c, accessed online June 23rd, 2010